Kubernetes Autoscaling has become a standard way for businesses to scale workloads on demand while keeping infrastructure costs under control. Heading into 2025, new trends and best practices are changing how enterprises manage their Kubernetes workloads. In this blog, we explore the latest developments in Kubernetes Autoscaling and how businesses can use them to improve performance and efficiency.

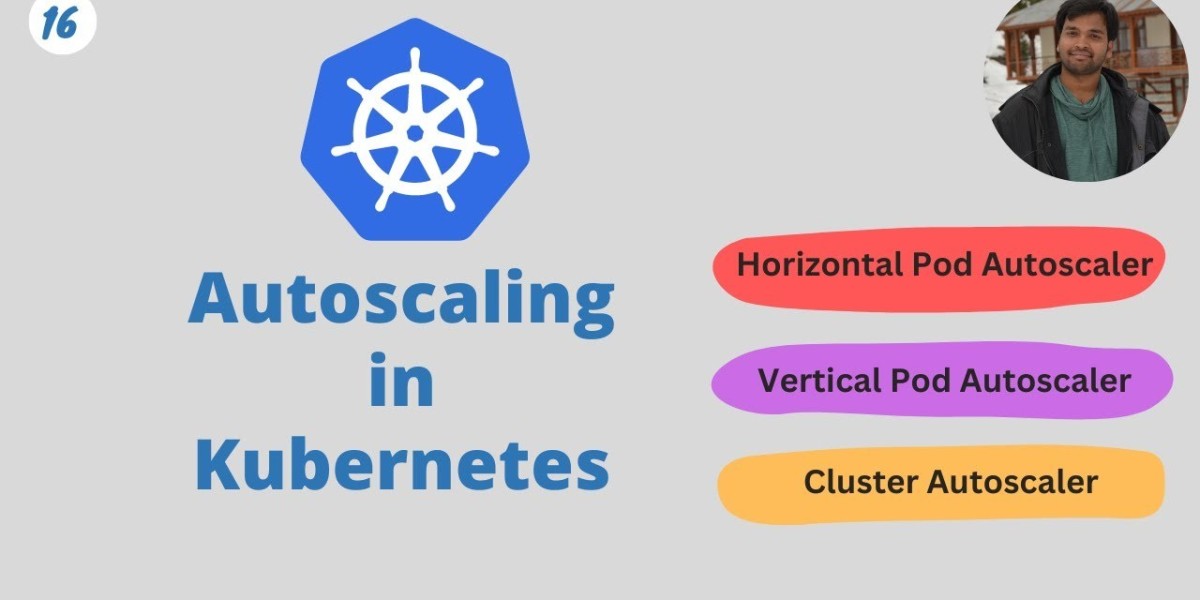

Understanding Kubernetes Autoscaling

Kubernetes Autoscaling is a powerful feature that enables applications to dynamically scale based on workload demands. It consists of three key components:

Horizontal Pod Autoscaler (HPA): Adjusts the number of pods in a deployment based on CPU, memory, or custom metrics.

Vertical Pod Autoscaler (VPA): Dynamically adjusts resource requests and limits for individual pods.

Cluster Autoscaler (CA): Adds worker nodes when pods are pending because they cannot be scheduled on existing nodes, and removes nodes that stay underutilized.

These mechanisms allow businesses to maintain optimal performance while reducing infrastructure costs.
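To make the HPA behavior concrete, here is a minimal Python sketch of the replica calculation described in the Kubernetes documentation: the desired replica count is the current count multiplied by the ratio of the observed metric to the target, rounded up, with no change when the ratio is within a tolerance (0.1 by default). The function name and parameters are our own for illustration, and the real controller also handles missing metrics, pods that are not ready, and min/max bounds.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     tolerance: float = 0.1) -> int:
    """Illustrative version of the documented HPA scaling formula."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 pods averaging 90% CPU against a 60% target
print(desired_replicas(4, 90, 60))
```

The same arithmetic applies to memory or custom metrics; only the source of `current_metric` changes.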

Latest Trends in Kubernetes Autoscaling for 2025

1. AI-Driven Autoscaling

With the rise of artificial intelligence (AI) and machine learning (ML), AI-driven Kubernetes Autoscaling is becoming more prevalent. AI-powered solutions analyze historical data to predict traffic spikes and adjust resource allocation ahead of demand, which can reduce latency and improve efficiency.

2. Multi-Cloud and Hybrid Cloud Scaling

As businesses embrace multi-cloud and hybrid cloud strategies, Kubernetes Autoscaling has evolved to support dynamic scaling across different cloud providers. Platforms like Kapstan offer intelligent autoscaling solutions that help enterprises balance workloads across AWS, Azure, and Google Cloud seamlessly.

3. KEDA for Event-Driven Scaling

Kubernetes Event-Driven Autoscaling (KEDA) has gained traction as businesses move towards serverless and event-driven architectures. KEDA scales workloads based on event sources such as message queue depth, Kafka topic lag, or custom metrics, and it can scale a workload down to zero when no events are pending.
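Queue-based scaling of this kind can be approximated with simple arithmetic. The Python sketch below is not KEDA's implementation; it only illustrates the idea of sizing a consumer pool from a backlog. The function name, the `target_per_replica` parameter, and the default bounds are assumptions made for the example.

```python
import math


def replicas_for_queue(queue_length: int,
                       target_per_replica: int,
                       min_replicas: int = 0,
                       max_replicas: int = 10) -> int:
    """Hypothetical helper: size a consumer pool from the pending backlog."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    # An empty queue lets the workload fall back to its minimum (possibly zero).
    if queue_length <= 0:
        return min_replicas
    wanted = math.ceil(queue_length / target_per_replica)
    # With work pending, keep at least one replica and respect the ceiling.
    return max(max(min_replicas, 1), min(wanted, max_replicas))


for backlog in (0, 1, 12, 1000):
    print(backlog, replicas_for_queue(backlog, target_per_replica=5))
```

Here each replica is assumed to handle about five pending messages, so a backlog of 12 asks for three replicas and a very large backlog is capped at `max_replicas`.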

4. Cost-Aware Autoscaling Strategies

With the increasing focus on cost optimization, enterprises are implementing cost-aware autoscaling strategies. By combining Kubernetes cost monitoring with rightsizing of resource requests, businesses can fine-tune autoscaling policies to reduce cloud expenses without compromising performance.

5. Serverless Kubernetes Scaling

The shift towards serverless Kubernetes is another major trend in 2025. Platforms like Knative allow businesses to run workloads that scale to zero when idle, so idle services stop consuming compute resources.

Best Practices for Kubernetes Autoscaling in 2025

To fully leverage Kubernetes Autoscaling, businesses should adopt these best practices:

1. Optimize Metrics Selection

Choosing the right metrics for autoscaling is crucial. While CPU and memory are common choices, consider using custom metrics like request latency, queue length, or application-specific KPIs to ensure precise scaling decisions.

2. Set Proper Scaling Limits

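Uncontrolled autoscaling can lead to excessive costs or degraded performance. Define explicit bounds for each autoscaler: minimum and maximum replica counts for HPA, minimum and maximum resource values for VPA, and node-count limits for Cluster Autoscaler. These bounds maintain stability and prevent unnecessary scaling events.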
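The Python sketch below shows how bounds and a scale-down delay work together. HPA documents a scale-down stabilization window (300 seconds by default) in which it acts on the highest recent recommendation, so a brief dip in load does not remove pods immediately. As a simplifying assumption, this example counts recent samples instead of seconds, and the class name and parameters are hypothetical.

```python
from collections import deque


class ScaleDownStabilizer:
    """Clamp recommendations to [min, max] and delay scale-down."""

    def __init__(self, min_replicas: int, max_replicas: int, window: int = 5):
        self.min_replicas = min_replicas
        self.max_replicas = max_replicas
        # Keeps only the last `window` bounded recommendations.
        self.recent = deque(maxlen=window)

    def decide(self, recommended: int) -> int:
        bounded = max(self.min_replicas, min(recommended, self.max_replicas))
        self.recent.append(bounded)
        # Scale up immediately, scale down only once every recent
        # recommendation in the window agrees on a lower value.
        return max(self.recent)


stabilizer = ScaleDownStabilizer(min_replicas=2, max_replicas=10, window=3)
print([stabilizer.decide(r) for r in (50, 4, 4, 4, 0)])
```

A spike to 50 is capped at the maximum of 10, the later drop to 4 only takes effect after it has persisted for the whole window, and a recommendation of 0 is raised to the minimum of 2.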

3. Implement Predictive Autoscaling

Leverage AI-powered predictive analytics to anticipate traffic patterns and scale resources proactively. Kapstan provides intelligent autoscaling solutions that help businesses prepare for seasonal traffic surges and unexpected spikes.
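To illustrate what scaling proactively means, the Python sketch below extrapolates the recent traffic trend one step ahead and sizes the deployment for the forecast plus some headroom. This is deliberately naive linear extrapolation, not an AI model and not a description of any vendor's product. The function names, the `rps_per_replica` capacity figure, and the 20% headroom are assumptions for the example.

```python
import math
from statistics import mean


def forecast_next(samples: list[float]) -> float:
    """Predict the next value by extending the average recent change."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    deltas = [later - earlier for earlier, later in zip(samples, samples[1:])]
    # Traffic cannot be negative, so floor the forecast at zero.
    return max(0.0, samples[-1] + mean(deltas))


def replicas_for_forecast(samples: list[float],
                          rps_per_replica: float,
                          headroom: float = 0.2,
                          min_replicas: int = 1) -> int:
    """Size the deployment for the forecast load plus a safety margin."""
    predicted = forecast_next(samples)
    needed = math.ceil(predicted * (1 + headroom) / rps_per_replica)
    return max(min_replicas, needed)


recent_rps = [100, 120, 140, 160]  # requests per second, oldest first
print(forecast_next(recent_rps))
print(replicas_for_forecast(recent_rps, rps_per_replica=50))
```

A production system would replace `forecast_next` with a model that captures seasonality and would still keep reactive autoscaling in place as a safety net.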

4. Leverage KEDA for Event-Based Workloads

For businesses dealing with event-driven applications, integrating KEDA can enhance responsiveness and resource efficiency. By autoscaling based on event triggers, organizations can ensure optimal performance while reducing costs.

5. Regularly Test and Monitor Autoscaling Policies

Autoscaling configurations should be continuously monitored and tested. Use Kubernetes Metrics Server to supply resource metrics, and observability tools like Prometheus and Grafana to track performance, analyze trends, and make data-driven improvements.

6. Integrate Cost Optimization Tools

To avoid over-provisioning and unnecessary cloud spending, use cost optimization tools such as Kubecost or AWS Cost Explorer in combination with Kubernetes Autoscaling. This ensures a balance between performance and financial efficiency.
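A quick way to see why rightsizing matters is to estimate what idle requests cost. The Python sketch below multiplies the gap between requested and actually used CPU by the replica count and an hourly price. The function name, the per-core price, and the 730 hours per month figure are illustrative assumptions and do not reflect any provider's real pricing.

```python
def monthly_cpu_waste(requested_cpu: float,
                      used_cpu_p95: float,
                      replicas: int,
                      price_per_cpu_hour: float,
                      hours_per_month: int = 730) -> float:
    """Estimate the monthly cost of CPU that is requested but not used."""
    # Usage above the request is not waste, so never go below zero.
    idle_cpu = max(0.0, requested_cpu - used_cpu_p95)
    return idle_cpu * replicas * price_per_cpu_hour * hours_per_month


# 10 pods each requesting 2 cores but peaking at 0.5 cores,
# at a hypothetical price of $0.04 per core-hour
waste = monthly_cpu_waste(2.0, 0.5, replicas=10, price_per_cpu_hour=0.04)
print(f"${waste:.2f} per month")
```

Cost tools perform a more complete version of this calculation, including memory, storage, and actual billing data.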

How Kapstan Helps Businesses with Kubernetes Autoscaling

At Kapstan, we specialize in providing advanced Kubernetes Autoscaling solutions tailored for modern enterprises. Our platform offers:

AI-powered predictive autoscaling for proactive workload management.

Multi-cloud autoscaling to balance workloads across AWS, Azure, and Google Cloud.

Cost optimization tools to help businesses reduce unnecessary cloud expenses.

Seamless KEDA integration for event-driven scaling.

Real-time monitoring and insights to ensure peak performance.

By leveraging Kapstan’s expertise, businesses can achieve high availability, cost efficiency, and seamless scalability with Kubernetes.

Conclusion

As Kubernetes Autoscaling continues to evolve in 2025, businesses must stay ahead by adopting AI-driven strategies, event-driven scaling, and cost-aware autoscaling solutions. Implementing best practices such as optimal metrics selection, predictive scaling, and cost optimization will ensure smooth operations and maximum efficiency.

With Kapstan, enterprises can embrace the latest trends in Kubernetes Autoscaling and future-proof their cloud infrastructure. Contact us today to explore how our intelligent autoscaling solutions can transform your Kubernetes workloads!